PLM Data Migration is a complex topic because it has a broad footprint and an unclear boundary. A common data migration myth is that it should be easy to move data from a source and map it to a destination. The complexity has several causes, to name a few: the source data that needs to be migrated into PLM was never managed in a “PLM Platform”; duplicate information resides in multiple systems that are defined in scope as ‘Source’; and old ways of doing things no longer apply in a new PLM environment. This is why Data Migration deserves the same attention you would give to defining a business practice or data model.

Context and Scope

The goal here is to make you aware of data migration complexities so that you can plan accordingly. This is not a technology recommendation or a prescribed approach to data migration, but a guide to the critical factors that need to be considered. We’ll discuss what is categorized as PLM data and where it will find its home in the future. Somewhere in the process, the data needs to be ‘transformed’ and ‘cleansed’ before it is usable.

Data Migration trap

When you are ready to migrate to a new PLM system, the following must be considered as part of estimating the Data Migration scope. First recommendation: engage a PLM data expert to define the scope rather than relying on an ETL expert. Here are the data migration tenets.

| Topic | Description | Our Experience |
|---|---|---|
| Source systems | Identify all source systems that contain PLM data. PLM data is defined as whatever you propose to move to the new PLM system. As mentioned previously, it could reside in other enterprise systems, on shared drives, or in SharePoint. | Our customers are asking us to merge ERP, Document Management, and PDM data to make it PLM! |
| Non-PLM sources | Commonly requested non-PLM data sources are Excel files and Word documents on shared drives, ERP systems, Quality Management systems (in a medical context), CAD/PDM systems, CAD files on disk, etc. The common distinction is ‘managed’ vs. ‘unmanaged.’ | TrackWise, MasterControl, Epicor, and Documentum are a few of the repositories we have encountered, along with numerous file formats in the case of ‘unmanaged’ data. |
| Legacy | All or latest? Should you leave behind a decade’s worth of previously released information that no one uses? | An important decision that no one can agree on: should all revisions be migrated, or just the latest? Regulated companies may have a different answer based on retention policies. Our experience shows that the answer may differ for CAD data vs. non-CAD data. |
| Archival strategy | Do you plan to retire the legacy system after migration? Can you keep your legacy system in view-only mode? What do you do with data that is not migrated? Is the archival strategy part of the Data Migration effort? | Our first-generation Archival tool, which focused on CAD data and complex relationships, has been sufficient for a decade. We are about to release a NextGen Archival tool that could facilitate search and retrieval by various criteria. Retention policies are not well defined within many organizations. |
| Transformation | You must engage the business users to classify and cleanse the data. In many meetings, I have heard users say: “That’s the old way of doing things,” “That data does not belong there,” “That’s legacy information,” or “That’s from a previous acquisition.” How much bad data will be migrated? All of the following scenarios must be carefully evaluated during transformation: • Data duplication and merging data • Rules for revisions • Rules for state | We are exploring advanced AI-based rules. |
| Downtime | From a downtime perspective, should the migration cutover happen over a weekend, or can it extend longer? | Weekend migrations are becoming a must. |
| Timing | When is the appropriate time to allocate resources to the data migration track: at the beginning of the project, during it, or toward the end? If you wait until the data model matures, it may be too late. If you start when the project kicks off, it may be too early. | Our experience shows that Data Migration activities must ramp up from day 1. |
| Full vs. incremental | ‘Incremental’ in this context means migrating a subset of data to the new target while the rest continues to be used in the existing system until it is time to migrate. This typically leads to the following issues: • Two systems may now have the same data being altered • Duplicate data must be cleaned up when you are ready to migrate • What risk are you trying to avoid by making this decision? The risk of using a new system and process? • Migrating one data source at a time does not fall under this category | Incremental is expensive. It can lead to data contention, and the effort takes on a life of its own. Where possible, do a full migration. Data migration from different sites or different PLM instances is not considered ‘incremental.’ |
| Skills | What skill sets are required? It is unlikely that one person knows both the legacy PLM and the future-state PLM to the depth required for migration. Additional complexity arises when one person must orchestrate meetings between technology and business experts. | Best-of-breed approach. It has to be a team effort! |
| Training | Training users to see the same data in a different way. | We have recommended a dedicated person for this effort. |
| Transactional data | Many legacy systems store audit trail information that tracks who performed each activity. Can you imagine logging every event for 5,000 users over a decade? Other information, such as workflow history and event queues, is managed by software tools and is not a good candidate for migration. | Sounds like a good candidate for archiving. |
| Advanced technologies | Using AI/ML-based technologies for data cleansing and transformation. Example: parts classification was not commonly available many years ago, so everyone introduced their own attributes. When you transition to next-generation PLM, the data should be transformed and classified to make the best use of the new features. | Our AI engine (HOLMES) will be integrated with our Data Migration processes and tools to recognize patterns and suggest a classification. |
| Managing dependencies | It has become common for multiple vendors and contractors to be involved in a complex migration. In 80% of our migrations, we have run into the following: infrastructure is managed by one group, legacy PLM by another, future-state PLM by yet another vendor, the PLM implementation is running in parallel, a different project manager is managing business users, etc. | You must have a strong technical project manager capable of understanding the various dependencies. |
| Implementation | Every organization should understand how it wants the system to improve its work processes after migration: how does one take advantage of the change? | Customers are looking at data migration as an IT exercise. Without a focus on implementation and training, the end result is a disappointment. |
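
The transformation scenarios listed in the table (duplicates, revision rules, state rules, and the “all or latest” question) can be made concrete with a small sketch. This is a minimal illustration, not a description of any specific migration tool: the flat `Record` layout, the `normalize` rule, the `STATE_MAP` contents, and the single-letter revision scheme are all hypothetical assumptions. Real rules have to be agreed with the business users.

```python
from dataclasses import dataclass

# Hypothetical mapping of legacy lifecycle states to target states (an
# assumption; real state rules must be agreed with the business users).
STATE_MAP = {
    "Released": "RELEASED",
    "Approved": "RELEASED",
    "In Work": "IN_WORK",
    "Obsolete": "OBSOLETE",
}


@dataclass(frozen=True)
class Record:
    number: str    # part or document number as stored in the source system
    revision: str  # assumed single-letter revision scheme: "A", "B", ...
    state: str     # legacy lifecycle state name
    source: str    # name of the source system the record came from


def normalize(number: str) -> str:
    """Collapse cosmetic differences so the same item matches across systems."""
    return number.strip().upper().replace(" ", "").replace("_", "-")


def transform(records, latest_only=True):
    """Merge duplicates, apply the revision rule, then map states.

    Returns (migrated, rejected); rejected records carry a state with no
    agreed mapping and need a business decision before they can move.
    """
    # Duplicate rule (assumed): the first occurrence in input order wins.
    merged = {}
    for rec in records:
        merged.setdefault((normalize(rec.number), rec.revision), rec)

    # Group the surviving revisions by normalized item number.
    by_number = {}
    for (number, revision), rec in merged.items():
        by_number.setdefault(number, []).append((revision, rec))

    migrated, rejected = [], []
    for number, revs in by_number.items():
        revs.sort(key=lambda pair: pair[0])  # "A" < "B" < "C" for single letters
        selected = revs[-1:] if latest_only else revs  # revision rule
        for revision, rec in selected:
            state = STATE_MAP.get(rec.state)
            if state is None:
                rejected.append(rec)
            else:
                migrated.append({"number": number, "revision": revision,
                                 "state": state, "source": rec.source})
    return migrated, rejected
```

Switching `latest_only` is the code-level equivalent of the “all or latest” decision; the `rejected` list is where the conversations with business users start.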

Make Vs. Buy

I have seen many companies contemplate whether they should build their own Data Migration tools. The rationales are both entertaining and puzzling. Here are some good ones to share:

a. we do not want to expose confidential data to external consultants for processing

b. we know our data better than someone from outside

c. how can you go wrong by selecting an off-the-shelf ETL tool?

d. we migrate complex ERP data for a living; PLM should be less complex

Let us dive a little deeper into this topic. ETL tools are a good starting point for transactional data that must be moved from one source to another. PLM is more complex than that. Core expertise is required to map the data: CAD, BOM, document hierarchy, drawings, change items, audit history, revisions, versions, etc. More importantly, it is impossible to make good recommendations without understanding the capability being considered.
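
One way to see why PLM mapping is more than a row-by-row ETL copy: BOM links refer to parts by their legacy identifiers, so they can only be loaded once the parts exist in the target and their new identifiers are known. The sketch below is hypothetical; `assign_target_ids` stands in for a real PLM import API, and the ID format is an assumption.

```python
def assign_target_ids(legacy_ids, prefix="T"):
    """Hypothetical stand-in for loading parts: returns legacy_id -> target_id."""
    return {lid: f"{prefix}{n:05d}" for n, lid in enumerate(legacy_ids, start=1)}


def remap_bom(bom_links, id_map):
    """Rewrite (parent, child, qty) links using target IDs.

    Links whose parent or child was not migrated (for example, filtered out
    by a 'latest only' rule) are returned as orphans instead of being loaded
    as dangling references.
    """
    remapped, orphans = [], []
    for parent, child, qty in bom_links:
        if parent in id_map and child in id_map:
            remapped.append((id_map[parent], id_map[child], qty))
        else:
            orphans.append((parent, child, qty))
    return remapped, orphans


# Usage: parts are loaded first, then the BOM is remapped against their new IDs.
parts = ["L-1", "L-2", "L-3"]
id_map = assign_target_ids(parts)
bom = [("L-1", "L-2", 2), ("L-1", "L-3", 1), ("L-2", "L-9", 4)]
remapped, orphans = remap_bom(bom, id_map)
```

The same ordering dependency applies to document hierarchies, drawings attached to CAD models, and change items that reference affected objects.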

My strong view: Don’t build data migration tools; hire experts. Don’t focus on technology; focus on business issues and data fidelity. Bring in the movers; why build a truck?

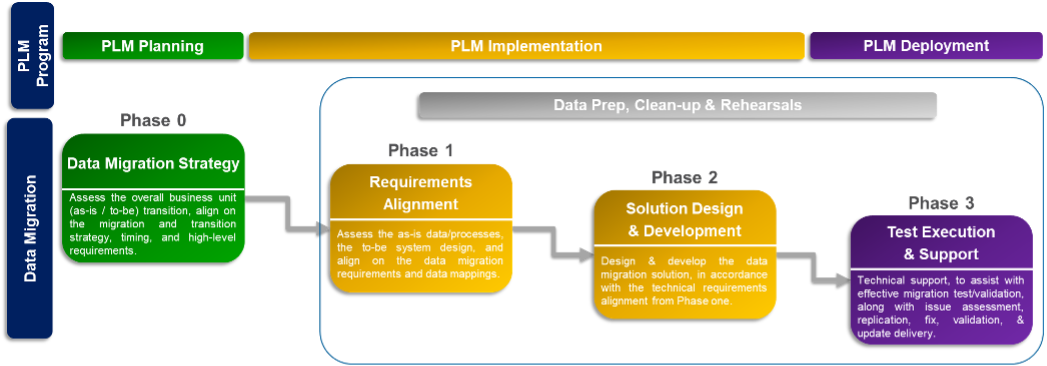

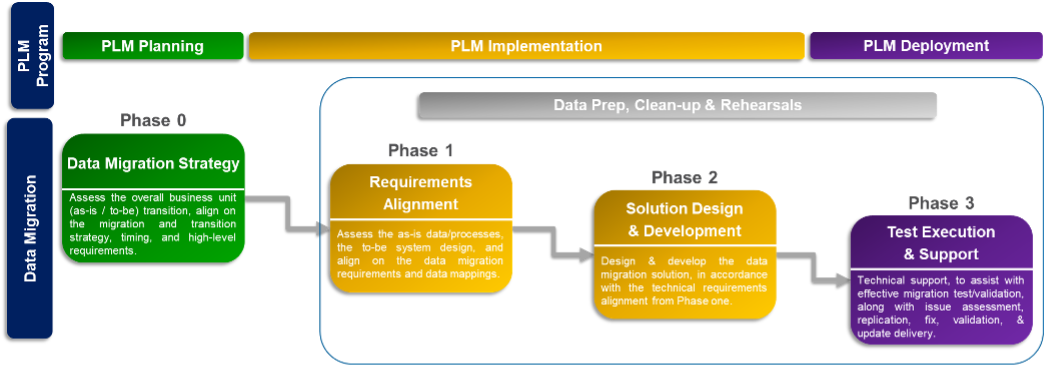

Our proven methodology begins with a high-level Questionnaire on what data needs to be migrated, followed by a Data Analysis using our DM export Tools, and finally, the scope definition. During the implementation phases, we perform Data Mapping and execute Data Migration Iterations. We will align ourselves to the PLM program timeline.
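
The Data Mapping step can be thought of as a declarative specification that is re-applied on every migration iteration. A minimal sketch, assuming hypothetical legacy attribute names (`PART_NO`, `DESC`, `WT_KG`) and target names; it also reports source attributes that have no mapping, so each iteration surfaces gaps for the business to resolve.

```python
# Hypothetical mapping: legacy attribute -> (target attribute, converter).
MAPPING = {
    "PART_NO": ("number", str.strip),
    "DESC": ("description", str.strip),
    "WT_KG": ("mass_kg", float),
}


def apply_mapping(legacy_row, mapping=MAPPING):
    """Convert one legacy row; return (target_row, sorted unmapped attributes)."""
    target, unmapped = {}, []
    for attr, value in legacy_row.items():
        if attr in mapping:
            name, convert = mapping[attr]
            target[name] = convert(value)
        else:
            # Not silently dropped: reported so the mapping can be extended.
            unmapped.append(attr)
    return target, sorted(unmapped)
```

Keeping the mapping as data rather than scattered code makes it easy to review with business users and to diff between iterations.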

Summary

Data Migration is binary: it either works or it does not. Data is either accurate or incorrect. There is no in-between.
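
Because the outcome is all-or-nothing, each iteration benefits from an automated reconciliation between source and target. The sketch below assumes both sides can be exported as records keyed by a business identifier (the `number` key is an assumption). It compares per-record fingerprints (SHA-256 of a canonical JSON encoding) and lists missing, unexpected, and mismatched records.

```python
import hashlib
import json


def fingerprint(record):
    """Stable hash of a record; sort_keys makes attribute order irrelevant."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def reconcile(source, target, key="number"):
    """Compare source and target exports; all lists empty means a clean run."""
    src = {r[key]: fingerprint(r) for r in source}
    tgt = {r[key]: fingerprint(r) for r in target}
    return {
        "missing": sorted(src.keys() - tgt.keys()),
        "unexpected": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }
```

In practice the source side would first be passed through the same transformation rules, so that intended changes are not flagged as mismatches.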

In practice, migrating 20 years of legacy data and processes as part of a transformation program could take 12-18 months of focused effort, and the cost could be as high as 10-20% of the overall PLM spend.
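
As a worked example of that range, assume a purely hypothetical overall PLM spend of USD 2 million:

$$
C_{\text{migration}} \approx r \times S_{\text{PLM}}, \qquad r \in [0.10,\ 0.20]
$$

$$
S_{\text{PLM}} = 2{,}000{,}000 \;\Rightarrow\; C_{\text{migration}} \approx 200{,}000 \text{ to } 400{,}000 \text{ USD}
$$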

Our differentiator: an easily understood and proven methodology, PLM domain expertise, and robust technology. We have helped 150 other customers succeed. Could we add you to the list?